Raspberry Pi 3B+ Hungs With Mmc_rescan

FleetingAfter moving from armv7 to arm64, I get from time to time those errors.

[Tue Jun 27 17:52:11 2023] INFO: task kworker/2:0:725409 blocked for more than 362 seconds.

[Tue Jun 27 17:52:11 2023] Tainted: G C 6.1.21-v8+ #1642

[Tue Jun 27 17:52:11 2023] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Tue Jun 27 17:52:11 2023] task:kworker/2:0 state:D stack:0 pid:725409 ppid:2 flags:0x00000008

[Tue Jun 27 17:52:11 2023] Workqueue: events_freezable mmc_rescan

[Tue Jun 27 17:52:11 2023] Call trace:

[Tue Jun 27 17:52:11 2023] __switch_to+0xf8/0x1e0

[Tue Jun 27 17:52:11 2023] __schedule+0x2a8/0x830

[Tue Jun 27 17:52:11 2023] schedule+0x60/0x100

[Tue Jun 27 17:52:11 2023] __mmc_claim_host+0xbc/0x208

[Tue Jun 27 17:52:11 2023] mmc_get_card+0x3c/0x50

[Tue Jun 27 17:52:11 2023] mmc_sd_detect+0x28/0x98

[Tue Jun 27 17:52:11 2023] mmc_rescan+0xa0/0x2c8

[Tue Jun 27 17:52:11 2023] process_one_work+0x208/0x480

[Tue Jun 27 17:52:11 2023] worker_thread+0x50/0x428

[Tue Jun 27 17:52:11 2023] kthread+0xfc/0x110

[Tue Jun 27 17:52:11 2023] ret_from_fork+0x10/0x20

[Tue Jun 27 17:56:12 2023] INFO: task kworker/2:0:725409 blocked for more than 120 seconds.

[Tue Jun 27 17:56:12 2023] Tainted: G C 6.1.21-v8+ #1642

[Tue Jun 27 17:56:12 2023] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Tue Jun 27 17:56:12 2023] task:kworker/2:0 state:D stack:0 pid:725409 ppid:2 flags:0x00000008

[Tue Jun 27 17:56:12 2023] Workqueue: events_freezable mmc_rescan

[Tue Jun 27 17:56:12 2023] Call trace:

[Tue Jun 27 17:56:12 2023] __switch_to+0xf8/0x1e0

[Tue Jun 27 17:56:12 2023] __schedule+0x2a8/0x830

[Tue Jun 27 17:56:12 2023] schedule+0x60/0x100

[Tue Jun 27 17:56:12 2023] __mmc_claim_host+0xbc/0x208

[Tue Jun 27 17:56:12 2023] mmc_get_card+0x3c/0x50

[Tue Jun 27 17:56:12 2023] mmc_sd_detect+0x28/0x98

[Tue Jun 27 17:56:12 2023] mmc_rescan+0xa0/0x2c8

[Tue Jun 27 17:56:12 2023] process_one_work+0x208/0x480

[Tue Jun 27 17:56:12 2023] worker_thread+0x50/0x428

[Tue Jun 27 17:56:12 2023] kthread+0xfc/0x110

[Tue Jun 27 17:56:12 2023] ret_from_fork+0x10/0x20

[Tue Jun 27 17:58:13 2023] INFO: task kworker/2:0:725409 blocked for more than 241 seconds.

[Tue Jun 27 17:58:13 2023] Tainted: G C 6.1.21-v8+ #1642

[Tue Jun 27 17:58:13 2023] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[Tue Jun 27 17:58:13 2023] task:kworker/2:0 state:D stack:0 pid:725409 ppid:2 flags:0x00000008

[Tue Jun 27 17:58:13 2023] Workqueue: events_freezable mmc_rescan

[Tue Jun 27 17:58:13 2023] Call trace:

[Tue Jun 27 17:58:13 2023] __switch_to+0xf8/0x1e0

[Tue Jun 27 17:58:13 2023] __schedule+0x2a8/0x830

[Tue Jun 27 17:58:13 2023] schedule+0x60/0x100

[Tue Jun 27 17:58:13 2023] __mmc_claim_host+0xbc/0x208

[Tue Jun 27 17:58:13 2023] mmc_get_card+0x3c/0x50

[Tue Jun 27 17:58:13 2023] mmc_sd_detect+0x28/0x98

[Tue Jun 27 17:58:13 2023] mmc_rescan+0xa0/0x2c8

[Tue Jun 27 17:58:13 2023] process_one_work+0x208/0x480

[Tue Jun 27 17:58:13 2023] worker_thread+0x50/0x428

[Tue Jun 27 17:58:13 2023] kthread+0xfc/0x110

[Tue Jun 27 17:58:13 2023] ret_from_fork+0x10/0x20

I disabled the swap, because I don’t want my sd card to be overused and I’d rather have some programs stopping rather than my machine becoming unresponsive. Because it is a node in nomad, I trust nomad for already stopping programs that consume too much ram.

I tried using f3 to scan the card and no issues were detected.

Also, it appears to be a well known issue.

- https://github.com/raspberrypi/linux/issues/2810

- https://github.com/agherzan/meta-raspberrypi/issues/1088

It seems to appear when the card needs to flush the dirty pages on disk. The disk is so slow compared to the RAM being filled up that linux makes a pause.

It can be due to

- a card too slow,

- a card starting to fail,

- some issues with the card reader,

Some people put the card in a SD-USB adapter and boot over USB (Pi 3 B+ booting on the usb card). This increased the speed, hence we cannot tell whether it fixes the slowness issue or some hardware issue. At least, it can tell that the sd card is not at fault.

Some also boot over a SSD drive.

Some decrease the values of vm.dirty_ratio and vm.dirty_background_ratio to make those flush happen more often, leading to decreasing overall performances but also the odds of getting into this situation. see Linux Kernel panic issue: How to fix hung_task_timeout_secs and blocked for more than 120 seconds problem

Trying to reproduce the issue

This should happen when a big file is being read (creating many dirty pages) while a process is asking for much memory.

EDIT I did not continue this

Setting the dirty_ratio values

The current value on my rpi is

ssh burberry sudo sysctl -a |grep dirty_|grep ratio

vm.dirty_background_ratio = 10

vm.dirty_ratio = 20

The suggested values are

sudo sysctl -w vm.dirty_ratio=10 sudo sysctl -w vm.dirty_background_ratio=5 sudo sysctl -p

vm.dirty_background_ratio = 5 vm.dirty_ratio = 10

It did not change the issue.

Adding reserved memory in nomad

Those machines are actually nomad agents. I added this block in their configuration.

reserved {

"memory" = 100

}

So far, so good. I did not have any issue for about a whole week.

I got a few of them today, moving on to using RPI4B instead.

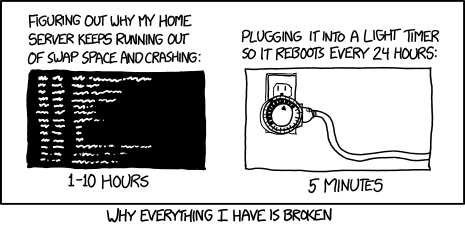

Trying soft reboot from time to time

I have solved the issue for me, but it is not a general solutions. Anyway i describe here my workarround maybe it helps some other to solve the problem. As is wrote earlier, my setup is a raspberry pi kubernetes cluster with 3 worker and 1 master. The master and 2 of the worker are Raspberry Pi 3 B+ and one worker is a older Pi 3 B. The problem happens on every worker after 24-28 hours when the worker runs my home automation software called FHEM. When the problem happens, kubernetes rescheduled FHEM to run on another worker, so every worker has the problem. FHEM collects many data from external sensors like temperature in rooms or values from the heating or wind data. This many data is written via GlusterFS to a GlusterFS cluster which is based on two Raspberry 1 with USB Sticks for the data. Kubernetes and Docker wrote their status messages and other stuff to the local SD card. My solution is to restart FHEM every night at 00:05:00 am. After that the problem never happens again, it is running stable since 5. june. The restart command shuts down the kubernetes pod and kubernetes restarts the pod after a few seconds. This means that in the background the docker container in which the FHEM runs will be shutdown and restarted.